When I first started working at the nation’s largest refinery my boss didn’t have any great projects ready for me so he sent me to “Shift Super” training. There were only 4 of us students – me and 3 unit operators, each with at least 20 years of experience. I was only 19 years old and I barely knew anything about anything.

Each shift supervisor runs a big chunk of the refinery: 2-4 major units. But shift supers needed to know how to supervise any of the 10 or so control centers safely, so on my first day of work I was learning how the entire refinery operated. It felt like a lifetime’s education crammed into 5 days.

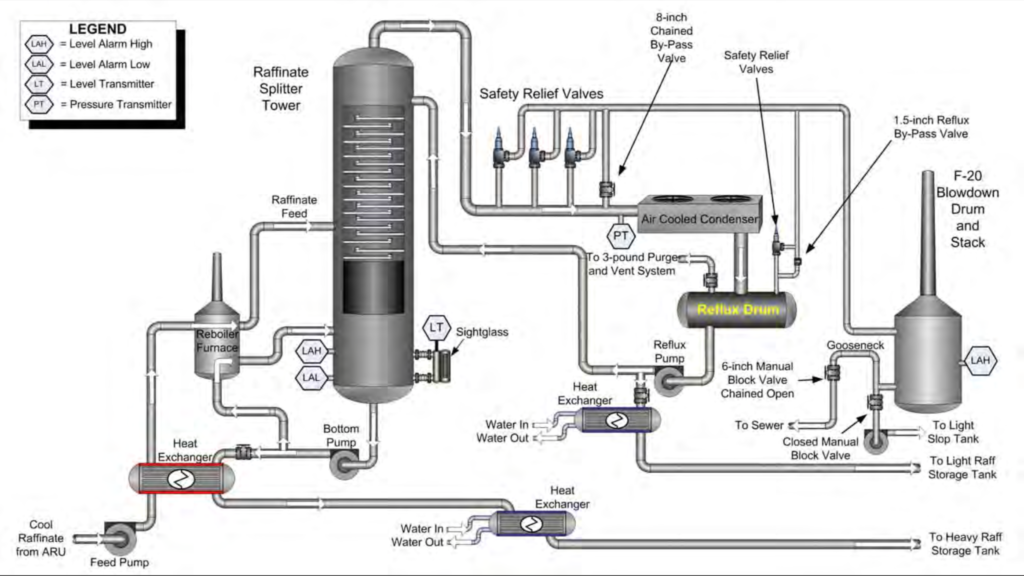

After Shift Super training, I began to see more and more of the refinery with my own eyes. What had been a precise line drawn from one perfect cylinder to another perfect cylinder was actually a rust-covered 6-inch pipe baking in the Texas heat, transporting high-octane, extremely flammable raffinate from a 170 ft separation tower to a temporary holding tank.

Fear of Disaster

One evening – about 2 weeks in – as the refinery was becoming a real place to me, I had this moment of pure panic while driving home.

With so many things that could go wrong at any moment, how was the refinery still standing? How had it even made it until now? Any minor mistake – in the design, production, construction, or operation of any pipe or vessel – could result in a huge disaster. Would the refinery even be there when I returned tomorrow morning?

I couldn’t sleep. The next morning the refinery was still there. And the next. And the next. And my fears slowly morphed into amazement. My refinery was the largest, most complicated system I had ever attempted to wrap my brain around.

BP Texas City Refinery Explosion

But just 29 miles away from my refinery, investigators were trying to piece together what happened during the nation’s worst industrial disaster in nearly 2 decades.

Fifteen people had been killed and 180 injured – dozens were very seriously injured. The cause of each fatality was the same: blunt force trauma.

Windows on houses and businesses were shattered three-quarters of a mile away and 43,000 people were asked to remain indoors while fires burned for hours. 200,000 square feet of the refinery was scorched. Units, tanks, pipes were destroyed and the total financial loss was over $1.5 billion.

The BP Texas City Refinery Explosion was a classic disaster. A series of engineering and communication mistakes led to a 170-foot separation tower in the ISOM unit being overfilled with tens of thousands of gallons of extremely flammable liquid raffinate – the component of gasoline that really gives it a kick. The unit was designed to operate with about 6,000 gallons of liquid raffinate, so once the vessel was completely filled, 52,000 gallons of 200 °F raffinate rushed through various attached vapor systems. Hot raffinate spewed into the air in a 20-foot geyser. With a truck idling nearby, an explosion was imminent.

This video from the US Chemical Safety Board (CSB) is easy to consume and well done. The 9 minutes starting at 3:21 explain the Texas City incident in detail:

I’ve studied this and other disasters in detail because in order to prevent disasters we have to understand their anatomy.

The Trigger

The trigger is the most direct cause of a disaster and is usually pretty easy to identify. The spark that ignited the explosion. The iceberg that ruptured the ship’s hull. The levee breaches that flooded 80% of New Orleans.

But the trigger typically only tells a small part of the story and it usually generates more questions than answers: Why was there a spark? Why was highly flammable raffinate spewing everywhere? Why was there so much? What brought these explosive ingredients together after so many people had worked so hard to prevent situations exactly like this?

While the trigger is a critical piece of the puzzle, a thorough analysis of a disaster has to look at the bigger picture.

When The Stars Align

The word disaster describes rapidly occurring damage or destruction of natural or man-made origins. But the word disaster has its roots in the Italian word disastro, meaning “ill-starred” (dis + astro). The sentiment is that the positioning of the stars and planets is astrologically unfavorable.

One of the things I learned from poring over the incident reports of the Texas City Explosion was that disasters tend to only happen when at least 3 or 4 mistakes are made back to back or simultaneously – when the stars align.

Complex systems typically account for obvious mistakes. But they less frequently account for several mistakes occurring simultaneously. The stars certainly aligned in the case of the Texas City Refinery Explosion:

- Employees and contractors were located in fragile wooden portable trailers near dangerous units that were about to start up.

- The start-up process for the ISOM unit began just after 2 AM, when workers were tired and conditions were not ideal.

- The start-up was done over an 11-hour period, meaning that the procedure spanned a shift change – creating many opportunities for miscommunication. Unfortunately, the start-up could have easily been done during a single shift.

- At least one operator had worked 30 back-to-back 12-hour days because of the various turnaround activities at the refinery and BP’s cost-cutting measures.

- One liquid level indicator on the vessel that was being filled was only designed to work within a narrow range.

- Once the unit was filled above the indicator’s upper range, the indicator reported incorrect values, misleading operators about the true liquid level in the tower. (e.g., at one point the level indicator reported that the liquid level in the tower was only 7.9 feet when it was actually over 150 feet)

- A backup high level alarm located above the level indicator failed to go off.

- The lead operator left the refinery an hour before his shift ended.

- Operators did not leave adequate or clear logs for one another, meaning that knowledge failed to transfer between key players.

- The day shift supervisor arrived an hour late for his shift and therefore missed any opportunity for direct knowledge transfer.

- Start-up procedures were not followed and the tower was intentionally filled above the prescribed start-up level because doing so made subsequent steps more convenient for operators.

- The valve to let fluids out of the tower was not opened at the correct time even though the unit continued to be filled.

- The day shift supervisor left the refinery due to a family emergency and no qualified supervisor was present for the remainder of the unfolding disaster. A single operator was now operating all 3 units in a remote control center, including the ISOM unit that needed special attention during start-up.

- Operators tried various things to reduce the pressure at the top of the tower without understanding the circumstances in the tower. One of the things they tried – opening the valve that moved liquids from the bottom of the tower into storage tanks (a step that they had failed to do hours earlier) – caused very hot liquid from the tower to pass through a heat exchanger with the fluid entering the tower. This caused the temperature of the fluid entering the tower to spike, exacerbating the problems even further.

- The tower, which was never designed to be filled with more than a few feet of liquid, had now been filled to the very top – 170 feet. With no other place to go, liquid rushed into the vapor systems at the top of the tower.

- At this point, no one knew that the tower had been overfilled with boiling raffinate. The liquid level indicator read that the unit was only filled to 7.9 feet.

- Over the next 6 minutes, 52,000 gallons of nearly boiling, extremely flammable raffinate rushed out of the top of the unit and into adjacent systems – systems that were only designed to handle vapors, not liquids.

- Thousands of gallons of raffinate entered an antiquated system that vents hydrocarbon vapors directly to the atmosphere – called a blowdown drum.

- A final alarm – the high level alarm on the blowdown drum – failed to go off. But it was too late. Disaster was already imminent.

- Raffinate spewed from the top of the blowdown drum. The geyser was 3 feet wide and 20 feet tall. The hot raffinate instantly began to vaporize, turning into a huge flammable cloud.

- A truck, idling nearby, was the ignition source.

- The portable trailers were destroyed instantly by the blast wave and most of the people inside were killed. Fires raged for hours, delaying rescue efforts.

Man-made disasters don’t just happen in complex systems. The stars have to align. But the quality of the mistakes matters a lot. Had even one key error above been avoided or caught, this incident wouldn’t have happened. In this case, overfilling the unit by 150,000 gallons of nearly boiling flammable raffinate set off a chain of events that guaranteed disaster.

The Snowball Effect

Not all mistakes are created equal. Several of the errors in the Texas City Refinery Explosion compounded: Had operators followed the start-up procedure and not filled the tower beyond the designed level, had the tower been better designed to communicate liquid levels over a broader range, had the valve draining the tower been opened at the correct time, had the operators communicated properly between shifts… Had any one of these mistakes been avoided, the tower wouldn’t have been overfilled and this disaster would have been prevented.

Miscommunication errors seem to have a special way of compounding and spiraling out of control.

While preventing some of the other mistakes might have mitigated the damage done, failing to understand the quantity of raffinate in the tower ultimately caused the disaster at Texas City.

Preventing Disasters

Think about the complex systems you care about in your business and life. List the raw ingredients for a disaster. What information do decision makers and operators need in order to react appropriately?

Identify the single points of failure and the obvious triggers. Brainstorm scenarios – both common and uncommon – in order to better understand how different mistakes could interact with one another and how they could snowball out of control.

Pay attention to both the system’s design and the human errors – especially communication errors – that will inevitably arise during normal operation. Think about how you can design the system to be more resilient without sacrificing too much efficiency. What brakes can you build into the process to slow down snowballs?

Where do you need warning alarms? What are the right set-points for each alarm? How vocal do the alarms need to be? What happens when the alarms fail? How often will you test or double check your alarms?
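To make those questions a little more concrete, here is a minimal sketch of what "don't rely on a single alarm" might look like in code. It is only an illustration, not how any real refinery control system works. The class and function names, the transmitter range, and the flow rates are all made up for the example; the only figures taken from this article are the 7.9 ft reading and the roughly 6,000 gallon normal operating volume. The idea is to cross-check a level reading against an independent high-level switch and a simple in-minus-out inventory estimate.

```python
from dataclasses import dataclass


@dataclass
class LevelTransmitter:
    """Hypothetical level transmitter that is only valid inside a calibrated span."""
    range_low_ft: float
    range_high_ft: float
    saturation_margin: float = 0.05  # fraction of span treated as "too close to the limit"

    def is_trustworthy(self, reading_ft: float) -> bool:
        # A reading pinned near either end of the span may just mean the
        # instrument has run out of range, so flag it instead of trusting it.
        span = self.range_high_ft - self.range_low_ft
        margin = span * self.saturation_margin
        return self.range_low_ft + margin <= reading_ft <= self.range_high_ft - margin


def estimate_inventory_gal(start_gal, flow_in_gpm, flow_out_gpm, minutes):
    """Rough inventory estimate: starting volume plus what went in minus what came out."""
    return start_gal + (flow_in_gpm - flow_out_gpm) * minutes


def check_level(transmitter, reading_ft, high_switch_tripped, estimated_gal, max_normal_gal):
    """Return a list of warnings; an empty list means no check objected."""
    warnings = []
    if not transmitter.is_trustworthy(reading_ft):
        warnings.append("level reading is at the edge of its calibrated range")
    if high_switch_tripped:
        warnings.append("independent high-level switch tripped")
    if estimated_gal > max_normal_gal:
        warnings.append("mass balance says inventory exceeds normal operating volume")
    return warnings


# Assumed numbers for illustration only: a 0-9 ft transmitter span,
# 500 gal/min in, nothing out, for 3 hours.
transmitter = LevelTransmitter(range_low_ft=0.0, range_high_ft=9.0)
estimated = estimate_inventory_gal(start_gal=3000, flow_in_gpm=500, flow_out_gpm=0, minutes=180)
for warning in check_level(transmitter, reading_ft=7.9, high_switch_tripped=False,
                           estimated_gal=estimated, max_normal_gal=6000):
    print(warning)
```

In this made-up scenario the level reading looks plausible and the high-level switch stays silent, yet the inventory estimate still objects. Independent checks earn their keep exactly when the primary indicator and its backup alarm both fail quietly.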

Summary

Disasters tend to happen within large and complex systems. Usually, the immediate cause of a disaster – the literal or figurative spark or trigger – can be readily identified. But there’s almost always a bigger picture, a series of mistakes and errors that led to that spark or gave that spark power. Some of those mistakes set off bigger and bigger problems, which can snowball into something truly catastrophic.

Bottom Line: Understanding the anatomy of a disaster in your world is the first step to designing better systems, procedures, and training to help mitigate damage or prevent disasters altogether.

Sources & Further Reading

If you’re really curious about the details of the Texas City Refinery Explosion, check out the 2 reports I read when studying the incident: 1) the US Chemical Safety Board’s (CSB) 341-page report. 2) BP’s 192-page report.